Introduction

Documents identified by computer forensic investigations in civil litigation typically require review and analysis by attorneys to determine if the uncovered evidence could support causes of action such as breach of contract, breach of fiduciary duty, misappropriation of trade secrets, tortious interference, or unfair competition. In addition, bit-for-bit forensic imaging of workstations is also commonly used as an efficient method to quickly gather evidence for further disposition in general commercial litigation matters. For example, instead of relying upon individual custodians to self-select and copy their own files, forensic images of workstations can be accurately filtered down to exclude system files, which only a computer can understand, and identify files which humans do use such as Microsoft Word, Excel, PowerPoint, Adobe PDF files and email. In any of the above situations, be it a trade secrets type matter or a general commercial litigation case, litigants are always highly sensitive to the potential costs associated with attorney review.

Now that Microsoft Windows 8 workstations are available for sale and will likely be purchased by corporate buyers, civil cases involving the identification and analysis of emails from such machines are a certainty. Recent computer forensic research on Windows 8 by Josh Brunty, Assistant Professor of Digital Forensics at Marshall University, revealed that “In addition to Web cache and cookies, user contacts synced from various social media accounts such as Twitter, Facebook, and even e-mail clients such as MS Hotmail are cached with the (sic Windows 8) operating system” (source: http://www.dfinews.com/article/microsoft-windows-8-forensic-first-look?page=0,3). Building on Professor Brunty’s scholarship, I set out to determine the extent to which email communications exist on a Windows 8 machine, in what amounts, and in which file formats. A further goal was to identify any potential issues in processing locally stored communications for attorney review in the discovery phase of civil litigation.

As you will see, the format in which Windows 8 stores email locally does in fact present potentially significant challenges to cost-effective discovery in both trade secrets type matters and general commercial litigation cases. Fear not, as my conclusion offers some potential solutions as well as other important considerations. I have written this article in detailed steps so that others might more easily duplicate my results.

Testing

My testing was performed on the Release Preview version of Windows 8, so I will be upgrading the subject workstation to the current retail version, re-running my tests and reporting the results in a later publication.

1. Subject Workstation “Laptop”

- Manufacturer: Dell Latitude D430

- Specifications: Intel Core 2 CPU U7600 @ 1.20GHz / 2.00GB Installed RAM /

- OS: Windows 8 Release Preview / Product ID: 00137-11009-99904-AA587

- HARD DRIVE: SAMSUNG HS122JC ATA Device / Capacity 114,472 MB

2. Windows 8 Installation

The Dell Laptop originally came with Windows XP Professional installed, but I replaced XP with Windows 8 Release Preview (“W8”) using an installation DVD burned from the W8 .ISO file provided by Microsoft’s website.

3. Windows 8 Preparation

I created a single user account called “User” with a password of “password”. After the W8 initiation phase ended, I was presented with the new “tile” interface, which is much more akin to an iPhone, iPad, or Android metaphor. Unfortunately, my Dell laptop did not have a touch screen that would have allowed me to take more advantage of the tiles. Even on this older machine, the built-in track pad and other mouse controls all worked perfectly out of the box, so I was able to proceed with installing various communication applications.

A. Connecting the Windows 8 laptop to web based accounts

On W8’s default new tile screen, there are three key tiles I began with: “People”, “Messaging” and “Mail”. Within the “People” tile, I connected my contacts to my Microsoft, Facebook, LinkedIn and Google accounts. Connecting to these external accounts brought in a flurry of contact profile pictures, email addresses, phone numbers, physical addresses, company names, job titles and websites from LinkedIn. Interestingly, my own record, “Me”, did not import a profile picture from any of my online accounts, leaving a generic silhouette tile. Perhaps Microsoft does not allow LinkedIn, Gmail and Facebook to set the picture for my local Windows 8 profile. I do not have a profile picture associated with my Microsoft Live account, which might be the cause of the missing profile picture.

Below is the end-user view under the Windows 8 “Mail” tile showing imported emails from my Google Gmail account:

- Inbox: 34

- Drafts: 0

- Sent items: 15

- Outbox: 0

- Junk: 0

- Deleted items: 22

- [Gmail] / All Mail: 34

- [Gmail] / Spam: 0

- [Gmail] / Starred: 2

- [Gmail] / FORENSIC: 1

- [Gmail] / Receipts: 0

- [Gmail] / Scarab: 2

- [Gmail] / Travel: 0

4. End User Installed Applications

I installed the following applications on the laptop:

A. Programs recorded by the Control Panel:

1. Adobe Flash Player 11 Plugin ver. 11.4.402.287

2. Google Chrome ver. 23.0.1271.64

3. Mozilla Firefox ver. 16.0.2 (x86 en-US)

B. Programs listed under Windows 8’s “Store” tile:

1. Tweetro (I did not link to any Twitter account)

2. Xbox Live Games (using Microsoft account user name “larry_lieb@yahoo.com”)

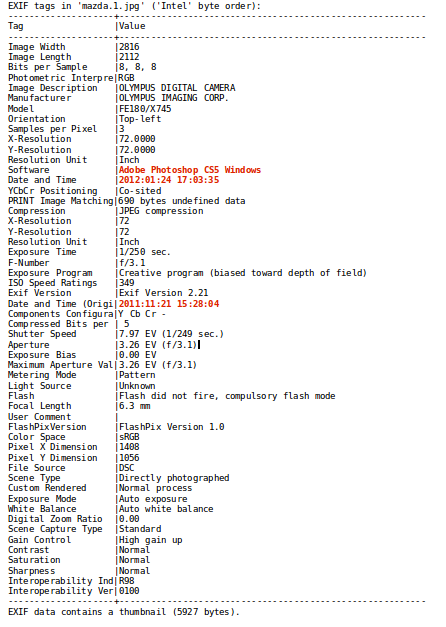

Using the Chrome browser, I logged into my Google account and installed “Gmail Offline” to see what effect this add-on would have. After installing “Gmail Offline”, the Chrome icon now appears in the system tray by default when viewing the Desktop. I then logged in to a newly created Yahoo account, which I called “larry.lieb@yahoo.com”. I sent and received several emails both to and from my Yahoo and Gmail accounts. While logged into my Yahoo.com email account, I imported contacts from my LinkedIn account. Now that I had created multiple sources of email and instant message correspondence, I set about imaging the laptop.

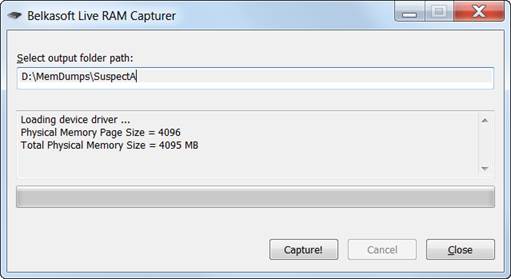

5. Forensic Imaging

I used Forward Discovery’s Raptor 2.5 (http://forwarddiscovery.com/Raptor) installed to a USB flash drive from the Raptor 2.5 .ISO file using Pendrivelinux.com’s free USB Linux tool. I changed the boot order to USB drive first, which then caused the laptop to boot the Raptor 2.5 operating system instead of Windows 8.

Within Raptor 2.5, I used the Raptor Toolbox to first mount a previously wiped and formatted external Toshiba hard drive, which was connected to the laptop via a USB cable. The total imaging and image verification process took close to eleven hours due to the slow USB connection. The internal Samsung hard drive uses a ZIF (zero insertion force) connector, so although I may have been able to achieve a faster imaging time using my Tableau ZIF to IDE tool (http://www.tableau.com/index.php?pageid=products&model=TDA5-ZIF), I was loath to tempt equipment failure as Tableau states, “ZIF connectors are not very robust and they are typically rated for only 20 insertion/removal cycles.” In addition, the Tableau kit only comes factory direct with Toshiba and Hitachi cables, which would not work with the Samsung drive.

6. Indexing

Using Passmark’s OSForensics ver. 1.2 Build 1003 (64 Bit) on my Digital Intelligence µFred forensic station (http://www.digitalintelligence.com/products/ufred/), I created an index of the Windows 8 files contained within the EnCase evidence files created by Raptor 2.5. OSForensics was able to create an index of the entire contents in around one hour.

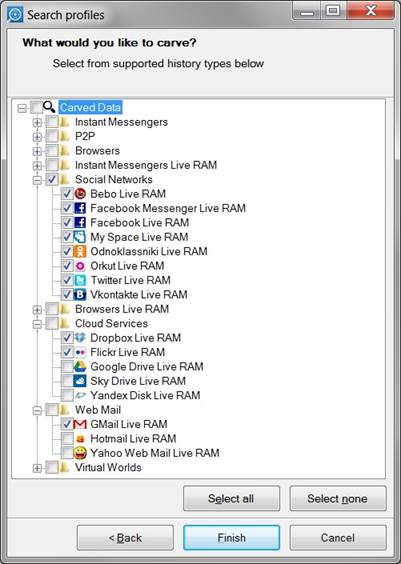

Under OSForensics’ “File Name Search” tab, I ran searches for common email file types. Out of 142,712 total items searched, OSForensics identified:

A. 2,204 items using the search string “*.eml”

B. 0 items using the search string “*.msg”

C. 0 items using the search string “*.pst”

D. 0 items using the search string “*.mbox”
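The wildcard searches above can be approximated outside of OSForensics. The following is a minimal Python sketch, not a description of how OSForensics works internally; the function name `count_matches` and the sample file names are hypothetical and used only for illustration.

```python
from fnmatch import fnmatchcase

# Search strings taken from the list above.
EMAIL_PATTERNS = ["*.eml", "*.msg", "*.pst", "*.mbox"]


def count_matches(file_names, patterns=EMAIL_PATTERNS):
    """Count how many file names match each wildcard pattern, ignoring case."""
    counts = {pattern: 0 for pattern in patterns}
    for name in file_names:
        lowered = name.lower()
        for pattern in patterns:
            # fnmatchcase compares exactly, so both sides are lower-cased first.
            if fnmatchcase(lowered, pattern.lower()):
                counts[pattern] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical file names, not taken from the subject laptop.
    sample = ["0001.eml", "Report.DOCX", "0002.EML", "archive.pst"]
    for pattern, total in count_matches(sample).items():
        print(pattern, total)
```

In practice the list of file names would come from a file listing exported from the forensic image rather than from a hard-coded sample.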

Using OSForensics’ “Create Signature” tab, I was able to run and export a hash value and file list report for the folder “1:\Users\User\AppData\Local\Packages\”.
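For readers without OSForensics, a comparable hash value and file list report can be sketched in Python against an exported copy of the folder. This is an illustrative sketch only: the function names are hypothetical, SHA-256 is my assumed default (the article does not state which algorithm the report used), and the output format does not mirror the OSForensics report.

```python
import hashlib
from pathlib import Path


def hash_file(path, algorithm="sha256", chunk_size=1024 * 1024):
    """Hash one file in chunks so large files do not need to fit in memory."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def hash_report(folder, algorithm="sha256"):
    """Return a sorted list of (relative path, hex digest) for every file under folder."""
    root = Path(folder)
    rows = []
    for path in sorted(root.rglob("*")):
        if path.is_file():
            rows.append((path.relative_to(root).as_posix(), hash_file(path, algorithm)))
    return rows


if __name__ == "__main__":
    # "." is a placeholder; point this at a working copy of the exported folder.
    for relative_path, digest in hash_report("."):
        print(digest, relative_path)
```

Such a script should only be run against an exported working copy, never against original evidence.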

7. .EML files

Using AccessData’s FTK Imager 3.1.1.8, I exported the contents of the folder path, “Users\User\AppData\Local\Packages\microsoft.windowscommunicationsapps_8wekyb3d8bbwe\LocalState\Indexed\LiveComm\larry_lieb@yahoo.com\”.

I noticed that there are three interesting folders that might warrant different treatment for electronic discovery projects:

A. Location of folder storing .EML files containing email communication:

OSForensics found 264 .EML files under the “Mail” folder path:

“microsoft.windowscommunicationsapps_8wekyb3d8bbwe\LocalState\Indexed\LiveComm\larry_lieb@yahoo.com\120510-2203\Mail\”

B. Location of folder storing .EML files containing contacts:

OSForensics found 1,939 .EML files under the “People” folder path:

“microsoft.windowscommunicationsapps_8wekyb3d8bbwe\LocalState\Indexed\LiveComm\larry_lieb@yahoo.com\120510-2203\People\”

C. Location of folder storing my “User” .EML contact file:

OSForensics found 1 .EML file under the “microsoft.windowsphotos \..\People\Me” folder path that contains my “User” profile:

“Users\User\AppData\Local\Packages\microsoft.windowsphotos_8wekyb3d8bbwe\LocalState\Indexed\LiveComm\larry_lieb@yahoo.com\120510-2203\People\Me”
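Because the folder path is what separates email from contacts here, the distinction can be expressed as a simple path test. The sketch below is a hedged illustration: `classify_eml` is a hypothetical helper, the file name `example.eml` is invented, and the rule (any “People” folder below “LiveComm” means contact, any “Mail” folder means email) is my assumption based only on the three paths listed above.

```python
from pathlib import PureWindowsPath


def classify_eml(path):
    """Label a Windows-style path as 'mail', 'contact', or 'other'.

    Assumption: .EML files below a "People" folder under "LiveComm" are
    contacts, and those below a "Mail" folder are email messages.
    """
    p = PureWindowsPath(path)
    if p.suffix.lower() != ".eml":
        return "other"
    parts = [part.lower() for part in p.parts]
    if "livecomm" not in parts:
        return "other"
    # Folders below LiveComm, excluding the file name itself.
    below = parts[parts.index("livecomm") + 1:-1]
    if "people" in below:
        return "contact"
    if "mail" in below:
        return "mail"
    return "other"


if __name__ == "__main__":
    base = (r"Users\User\AppData\Local\Packages"
            r"\microsoft.windowscommunicationsapps_8wekyb3d8bbwe"
            r"\LocalState\Indexed\LiveComm\larry_lieb@yahoo.com\120510-2203")
    print(classify_eml(base + r"\Mail\example.eml"))
    print(classify_eml(base + r"\People\example.eml"))
```

A classification like this would let contact files be set aside or counted separately before anything is exported for review.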

Conclusion

In electronic discovery projects that utilize forensic imaging tools to capture workstation hard drives, it is common for data filtering to be requested, such as De-NISTing, file type, key word, date range and de-duplication. Often, a file type “inclusion” list will be used to identify “user files” for further processing, such as Microsoft Word, Excel, PowerPoint, Adobe PDF, and common email file types such as .PST, .MSG, and .EML. Files found in the forensic image(s) will be exported for further processing and review by attorneys.
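To make the filtering steps concrete, here is a minimal Python sketch combining a file type inclusion list, De-NISTing (dropping files whose hash appears on a list of known system files) and de-duplication by hash. It is an assumption-laden illustration rather than any vendor’s workflow: the extension list, the function name `filter_user_files`, and the sample records and hash values are all hypothetical.

```python
from pathlib import PureWindowsPath

# Hypothetical inclusion list covering the file types named above.
INCLUDE_EXTENSIONS = {
    ".doc", ".docx", ".xls", ".xlsx", ".ppt", ".pptx",
    ".pdf", ".pst", ".msg", ".eml",
}


def filter_user_files(records, known_hashes=frozenset(), include=INCLUDE_EXTENSIONS):
    """Filter (path, hash) records down to unique, non-system user files.

    known_hashes is expected to hold lower-case hex digests of known files.
    """
    seen = set()
    kept = []
    for path, digest in records:
        digest = digest.lower()
        if PureWindowsPath(path).suffix.lower() not in include:
            continue  # file type is not on the inclusion list
        if digest in known_hashes:
            continue  # De-NIST: hash matches a known system or vendor file
        if digest in seen:
            continue  # de-duplication: identical content already kept
        seen.add(digest)
        kept.append(path)
    return kept


if __name__ == "__main__":
    # Invented records and placeholder hash values for illustration only.
    records = [
        (r"Users\User\Documents\plan.docx", "aa11"),
        (r"Windows\System32\kernel32.dll", "bb22"),
        (r"Users\User\Desktop\plan copy.docx", "aa11"),
    ]
    print(filter_user_files(records))
```

Note that a filter of this kind keeps every .EML file regardless of where it was stored, which is exactly the behavior that becomes a problem on Windows 8.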

One of the challenges attorneys face in electronic discovery is keeping costs reasonably low by avoiding human review of obviously non-relevant files. However, as Windows 8 appears to be storing contacts from LinkedIn, Gmail, and other sources as .EML files, it is apparent that using file type inclusion lists with .EML as an “include” choice will bring in many potentially non-relevant files.

If an attorney bills at a rate of $200/hour and can review fifty documents per hour, then the 1,939 “contact” .EML files alone would require 38.78 hours of attorney review time at a cost to the client of $7,756.00. Therefore, it may make sense for all parties to stipulate that .EML files from the “People” folder be excluded from processing and review unless the hard drive custodian’s contact list is potentially relevant to the underlying matter.
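The review cost estimate can be reproduced with a few lines of Python. This sketch uses the count of 1,939 .EML files reported for the “People” folder above, together with the stated assumptions of fifty documents per hour and $200 per hour; the function name `review_cost` is hypothetical.

```python
def review_cost(document_count, docs_per_hour=50, hourly_rate=200.0):
    """Return (review hours, total cost) for a linear attorney review."""
    hours = document_count / docs_per_hour
    return hours, hours * hourly_rate


if __name__ == "__main__":
    hours, cost = review_cost(1939)
    print(f"{hours:.2f} hours, ${cost:,.2f}")  # prints "38.78 hours, $7,756.00"
```

Changing the rate or review speed shows how quickly the cost of reviewing contact files scales on larger collections.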

In some cases, litigants do not or cannot pay for outside vendor electronic discovery processing fees and will direct their counsel to simply produce their electronically stored information. I advise against this practice, as it creates the potential for producing privileged or protected information. A requesting party may also object to the costs de facto shifted to them with this approach. Nonetheless, best practices and economic reality do not always mesh. Parties that wish to take this “no attorney review prior to production” approach with evidence gathered from Windows 8 machines may risk over-producing the “contact” .EML files to their opponent and should consider the risks associated with not allowing a professional to apply filters to their collection upfront.

Companies that are planning on purchasing and implementing Windows 8 workstations may want to consider altering their IT policies to prevent employees from linking to personal Gmail, LinkedIn and other web based identities, so that personal communication is not stored locally. I am uncertain whether such an option is available within the administrative portion of the Windows 8 operating system, or whether employee handbooks and training are the only means available to stop employees from bringing their home to work.

From the standpoint of ease in a trade secrets type computer forensic investigation, having a suspected former employee’s Gmail communication locally and readily available is excellent; certainly this ease of access is preferable to sending a subpoena to Google to retrieve similar information. However, in this author’s personal experience, general commercial litigation cases vastly outnumber cases involving traditional computer forensic issues. Perhaps companies that take steps to proactively prevent Windows 8 machines in the corporate environment from caching their employees’ personal communication locally may experience significantly less expensive discovery in the long run.

Acknowledgments

1. Josh Brunty, Assistant Professor of Digital Forensics at Marshall University

2. David Knutson and Tim Doris of Duff & Phelps for their sage opinions on Linux live CD versus ZIF connector to a hardware write-protection device acquisition approaches.

3. Patrick Murphy and Raechel Marshall of Quarles & Brady for their insight into document production risks.

About

Larry Lieb, ACE, CCA

CIO

Scarab Consulting (www.ConsultScarab.com)

LINK TO PDF: Windows 8 Computer Forensics and Ediscovery Considerations

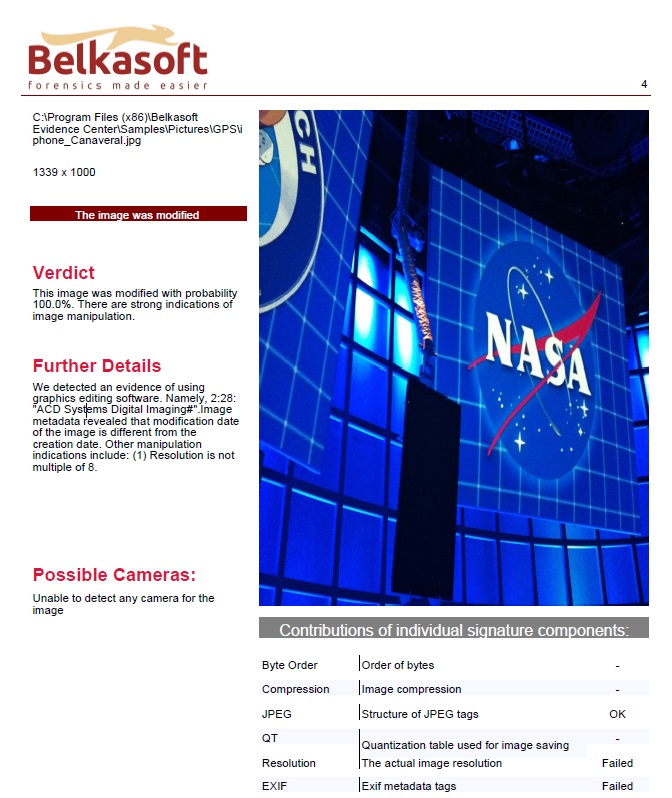
